The Office of Institutional Performance and Assessment (IPA) has assisted many faculty and staff with various aspects of Institutional Effectiveness and outcomes assessment. Below are some of the frequently asked questions that we have received over the years and our answers to them. Most answers contain links to additional information and resources. Please reach out to us with your suggestions for other questions that should be included on this list.

What is Institutional Effectiveness?

A planning, implementation and assessment process that allows us to evaluate whether our practices are meeting our goals. Institutional Effectiveness (IE) activities help assess performance and provide university accountability. The IE process reinforces instructional and administrative quality and effectiveness through a systematic review of goals and outcomes that are consistent with FSU's mission.

Why do we evaluate Institutional Effectiveness?

"Student outcomes - both within the classroom and outside of the classroom - are the heart of the higher education experience. Effective institutions focus on the design and improvement of education experiences to enhance student learning and support appropriate student outcomes for its educational programs and related academic and student services that support student success. To meet the goals of educational programs, an institution is always asking itself whether it has met those goals and how it can become even better." (SACSCOC Resource Manual, page 65).

The IE process is a key way to measure how well we are meeting institutional goals. We formally assess our IE for three main reasons:

1. To self-evaluate and improve,

2. To demonstrate the product of our efforts to the public and campus community,

3. To meet the requirements for accreditation.

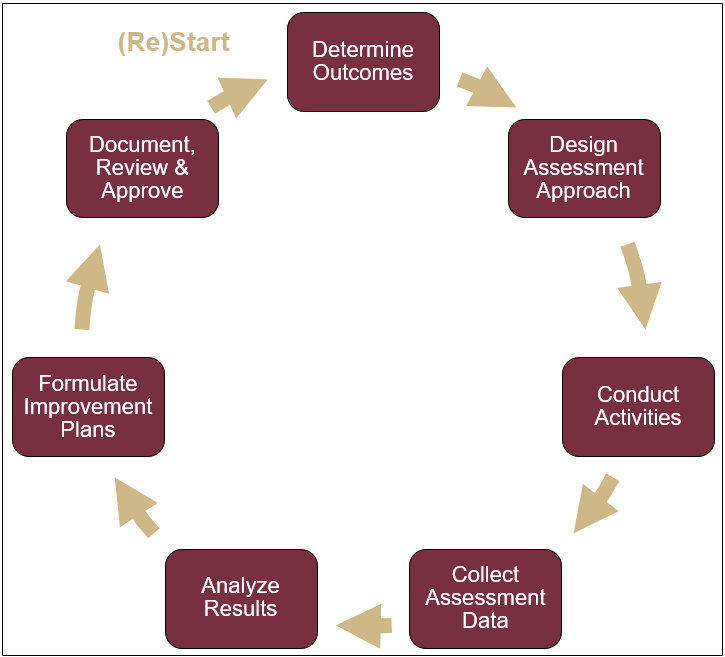

How do we assess Institutional Effectiveness?

Every campus unit sets its own annual performance goals, which are measured and evaluated to determine how well the unit performed on those goals in a given year.

Program Outcomes (POs) - All university units (academic, administrative, and academic and student support services) define and set expectations for their program outcomes. POs are the broader goals of the unit and may align with FSU Strategic Plan implementation, state funding metrics, strategic plans of the unit's College or Division, the requirements of discipline-specific accrediting agencies, and/or the unit's core/functional plans (for example, FSU Master Plan or FSU Emergency Management Plan).

Student Learning Outcomes (SLOs) - Academic programs develop student learning outcomes that specify the knowledge, skills, values, and attitudes that students will attain throughout their studies in a program or in a specific course. Assessment methods and desired levels of student competencies are established in accordance with discipline-specific expectations and at levels that are appropriate for post-graduation success. When feasible, SLOs can be written to conform to the requirements of discipline-specific accrediting agencies.

When do we assess Institutional Effectiveness?

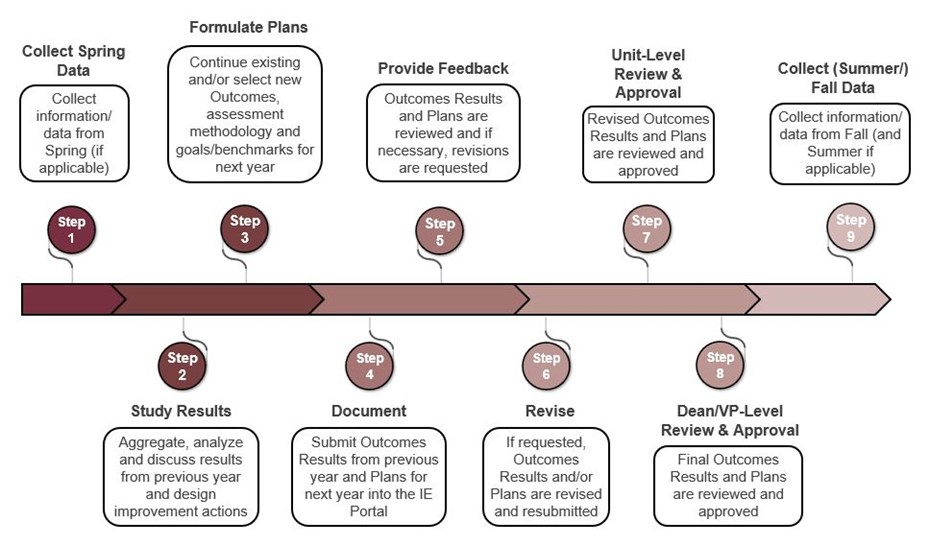

While improvement is a continuous, ongoing process, we document the results and analysis of Program Outcomes and Student Learning Outcomes only once a year, at the end of each unit’s assessment cycle. Each campus unit determines the start and end dates that work best for its IE timeline. Generally, educational programs operate on academic-year timelines. Common academic-year cycles are: 1) Fall and Spring semesters; 2) Fall, Spring, and Summer semesters; 3) Summer C, Fall, Spring, and Summer A & B semesters.

The general timeline for completing the various components of the assessment cycle is visualized in the accompanying figure. Each university Division and College has its own recommended calendar for engaging in each step of the process. All campus units are allowed and encouraged to complete their assessment process before the specified deadlines.

Who governs Institutional Effectiveness?

The Office of the Provost and Executive Vice President is responsible for the overall coordination of the university assessment process. The Office of Institutional Performance and Assessment (IPA) within the Office of the Provost provides assistance to FSU units during all stages of their IE assessment cycles. The final review and approval of entries in the IE Portal is the responsibility of the Executive Vice President for Academic Affairs (or designee).

Typically, each unit designates one or two people as assessment coordinators who lead and manage the assessment process and the implementation of improvements at the level of their unit. However, all members of the unit are expected to understand, provide input for, agree with, and participate in the IE process. IE assessment is a shared responsibility of faculty/staff, the assessment coordinators, the department chair/office director, and the associated dean/division VP. As such, all of them take part in an annual workflow that ensures defined Outcomes are appropriately designed, measured, analyzed, and reported in a timely fashion. Each unit creates the assessment governance structure most suitable to its size and functions.

Prior to or shortly after a unit's assessment coordinators enter the description of the IE assessment components into the IE Portal, department chairs/office directors (or designees) review and approve the submissions. The final review and approval should be conducted by the college dean/division VP (or authorized designee). The IPA Office develops suggested rubrics for evaluating IE submissions and distributes them on its website.

How do I complete my annual reporting in the IE Portal?

1. Log in to the IE Portal at iep.fsu.edu. If you do not yet have access to the IE Portal, email ipa@fsu.edu to request it.

2. Use the drop-down menu at the top of the page to select the appropriate educational program, administrative office, or student support service unit.

3. Use the navigation menu in the upper left corner to access the page “Outcomes Assessment: Plans and Results”.

4. On the resulting page, you will see all of the Outcomes for the selected unit. Double click on any Outcome to view its associated assessment plans and prior years’ results entries.

5. In most cases, the only task that you will need to complete is to report the results on the “Results, Analysis, Improvements” tab. After switching to this tab, click on the green plus icon at the far right of the header and enter information into the appropriate fields. Click Save, then Close.

6. On the next page, click Close again to go back to the list of all outcomes.

7. Repeat steps 4 through 6 for every outcome with an active status.

For more details on navigating the IE Portal, please see the IE Portal User Guides.

How do I access or request access to the IE Portal?

1. If you do not yet have access to the IE Portal and need it to enter, edit, or review the assessment reports, email ipa@fsu.edu to request access to the appropriate degree and/or certificate program(s).

2. Once you have access to the IE Portal, navigate to iep.fsu.edu. We recommend using the Chrome browser.

3. Log in with your FSU employee credentials when prompted. If you are already logged in to another FSU application, you will be taken directly to the Home page.

4. From the Home page, use the drop-down menu at the top of the page to switch between programs, and the navigation menu in the upper left corner to access different pages where you can view and enter assessment information.

For further steps on navigating the IE Portal, please see the IE Portal User Guides.

Can I schedule a meeting with the IPA Office staff?

Yes! In addition to our regularly scheduled annual University-wide seminars and trainings, the IPA Office is available to meet with you and/or your faculty and staff one-on-one for a consultation/working meeting or an IE Portal session. To expedite the scheduling process, please sign up for an available time slot here.

What is the difference between general education assessment and educational program assessment?

While both General Education assessment and Educational Program assessment aim to improve student learning outcomes, they differ in scope, focus, and level of analysis. General Education assessment takes place at the level of individual General Education courses, which may fall outside of a student’s direct field of study and are designed to develop well-rounded individuals. By contrast, Educational Program assessment takes place at the level of a specific degree or certificate program and typically includes only the upper-level courses required for learning specialized knowledge and skills.

General Education refers to the foundational knowledge and skills that students are expected to acquire across a broad range of disciplines (English Composition, Natural Science, Social Science, Humanities and Cultural Practices, Ethics, and Quantitative and Logical Thinking). Here, assessment is focused on evaluating whether students have achieved the desired learning outcomes in each of these specific areas. At FSU, assessment of General Education learning outcomes takes place on a 3-year cycle and is facilitated by the IPA Office and the Office of Liberal Studies.

Educational Program assessment is focused on evaluating the student learning outcomes and effectiveness of specific degree and certificate programs. Its goal is to examine whether students in a particular program are achieving the intended educational goals and acquiring the necessary knowledge, skills, and values for their field of study. Educational Program assessment occurs on an annual basis, with assessment reports being documented in the university’s IE Portal.

What is the appropriate number of Program and Student Learning Outcomes?

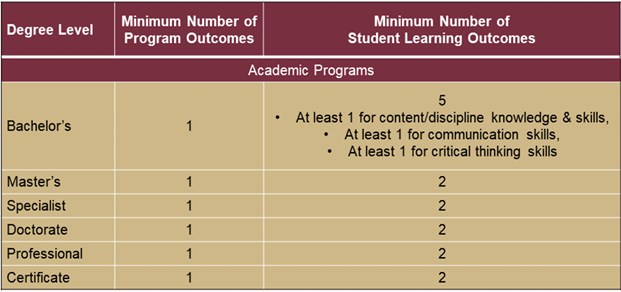

Each Academic/Student Support Services (A&SSS) and Administrative Support unit is required to formulate and actively advance at least two Program Outcomes in any given academic/fiscal year.

Each educational program (academic degree or certificate) is required to formulate at least 1 Program Outcome (PO) and at least 2 Student Learning Outcomes (SLOs), with the exception of Bachelor's degree programs, which have a higher SLO requirement. Due to increased accountability for undergraduate educational outcomes, Bachelor's programs are requested to articulate at least 5 SLOs, 3 of which must be assessed in 3000-4000 level courses and focus on 3 different categories from the following list: content/discipline knowledge and skills, communication skills, and critical thinking skills.
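The minimums above amount to a simple checklist. The Python sketch below encodes them as a quick self-check; it is not an IPA or IE Portal tool. The unit-type labels, the `SLO` record with its `course_level` and `category` fields, and the short category names are all hypothetical. The sketch also assumes that Bachelor's programs still need at least 1 PO, since the Bachelor's exception appears to concern only the SLO count.

```python
from dataclasses import dataclass

# Hypothetical short names for the three Bachelor's SLO categories.
REQUIRED_BACHELOR_CATEGORIES = {"content", "communication", "critical thinking"}


@dataclass
class SLO:
    name: str
    course_level: int  # level of the course where the SLO is assessed, e.g. 3000
    category: str      # hypothetical category label


def check_outcome_minimums(unit_type: str, num_pos: int, slos: list[SLO]) -> list[str]:
    """Return the minimums a unit does not meet (empty list = all met).

    unit_type (hypothetical labels):
      'support'   - A&SSS or Administrative Support unit
      'program'   - degree or certificate program other than a Bachelor's
      'bachelors' - Bachelor's degree program
    """
    problems = []
    if unit_type == "support":
        if num_pos < 2:
            problems.append("support units need at least 2 POs")
        return problems
    if unit_type not in ("program", "bachelors"):
        raise ValueError(f"unknown unit_type: {unit_type!r}")
    # Assumption: every educational program, including Bachelor's, needs 1+ PO.
    if num_pos < 1:
        problems.append("educational programs need at least 1 PO")
    min_slos = 5 if unit_type == "bachelors" else 2
    if len(slos) < min_slos:
        problems.append(f"need at least {min_slos} SLOs")
    if unit_type == "bachelors":
        # Categories covered by SLOs assessed in 3000- or 4000-level courses.
        upper = {s.category for s in slos if 3000 <= s.course_level < 5000}
        missing = REQUIRED_BACHELOR_CATEGORIES - upper
        if missing:
            problems.append(f"no 3000-4000 level SLO for: {', '.join(sorted(missing))}")
    return problems
```

Because all three categories must appear among the upper-division SLOs, the check implicitly requires at least 3 such SLOs, matching the rule above.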

What are the best practices for Improvement Actions of educational programs?

The most intensive part of the assessment process is devising plans for, and executing changes to, teaching and learning. Formulating sound improvement plans requires participation, engagement, and meaningful contribution on the part of instructional faculty and curriculum committees. Whether SLOs have been met or not, program faculty and leadership need to determine a plan of action for the next year.

Occasionally, the level of student learning does not meet the acceptable level of mastery, the threshold for the proportion of students meeting the learning goal, or both. In this case, academic programs should examine potential reasons why the standard for success was not met and then develop a set of enhancements to be put in place in the upcoming year(s). These plans should be based on the learning outcomes data and describe specific changes to be implemented, such as revising instructional materials, adding or removing topics from taught content, or incorporating more hands-on activities. Improvement plans may also require new or modified assessment practices or professional development. Importantly, “[p]lans to make improvements do not qualify as seeking improvement, but efforts to improve a program that may not have been entirely successful certainly do.” (SACSCOC Resource Manual, p. 69).
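An SLO target often combines the two thresholds mentioned above: a mastery level each student should reach, and a proportion of students expected to reach it. The sketch below checks both, assuming numeric scores. The function names and the example cutoffs (a mastery level of 75 points, 80% of students) are hypothetical values chosen for illustration, not IPA standards.

```python
def proportion_at_mastery(scores: list[float], mastery_level: float) -> float:
    """Share of students whose score is at or above the mastery level."""
    if not scores:
        raise ValueError("no scores to evaluate")
    return sum(score >= mastery_level for score in scores) / len(scores)


def slo_met(scores: list[float], mastery_level: float, proportion_threshold: float) -> bool:
    """An SLO is met when a large enough share of students reaches mastery."""
    return proportion_at_mastery(scores, mastery_level) >= proportion_threshold


# Hypothetical rubric scores (0-100) for five students.
scores = [92, 78, 65, 88, 70]
print(proportion_at_mastery(scores, 75))  # 0.6
print(slo_met(scores, 75, 0.80))          # False
```

Here only 3 of 5 students (60%) reach the mastery level, so the SLO falls short of the 80% proportion threshold and would call for the kind of analysis and improvement plan described above.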

In cases when SLOs are consistently achieved at a high level for several years, it is recommended to either raise the standard for success or add an SLO that addresses other important learning outcomes. If these changes are not feasible, academic programs should consider how they expect to maintain a high level of student learning.

Most improvement actions undertaken by educational programs fall into four categories, which should be considered and implemented one after another:

| Order | Type of improvement action | Rationale |
|---|---|---|
| FIRST | Refinements to the way learning outcomes are assessed | Changes to teaching and learning should be based on reliable and valid data, which come from a well-thought-out assessment methodology, so a strong assessment design comes first. |
| SECOND | Changes to how target content and skills are taught and practiced | Analysis of robust, rich, accurate student learning data should inform, and logically lead to, any changes to the instructional process, small or large. |
| THIRD | Adjusting expectations for demonstrated levels of learning | SLO numeric targets should be raised or lowered only after all feasible improvements to teaching and curriculum have been made in response to robust evidence. |
| FOURTH | Updating learning outcomes for the program | ‘Retiring’ existing learning outcomes should happen rarely, typically only after the three approaches to improvement above have been exhausted; however, new learning outcomes can be introduced at any point. |

The Quick Guide for SLOs Improvement Actions lists some of the most common changes that educational programs choose to pursue based on their analysis of student learning data.

Can I use final course grades to assess Student Learning Outcomes?

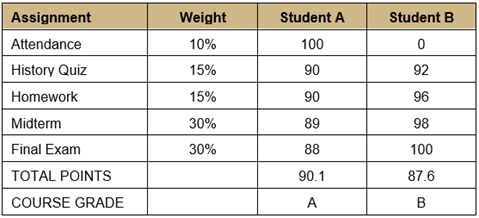

A very important aspect of designing a strong assessment methodology is avoiding the use of final course grades to assess SLOs. Final course letter grades and/or final overall points make it impossible to distinguish how well a student learned different, separate sets of content, skills, and values/attitudes. Furthermore, not only are final course grades based on student demonstration of multiple different knowledge and skill sets, but they also often include non-academic elements, such as class participation and attendance/tardiness. In addition, final course grades may be curved, which makes them even less accurate in gauging student learning outcomes. (See pages 10-11 in Suskie, 2009 for further discussion.)

The accompanying table illustrates how one student who better mastered the course content nonetheless received a lower overall grade because they did not receive points for consistent attendance. Even if the instructor excludes attendance from the final grade calculation, it would still be impossible to discern whether two students who received As have learned the same content and skills to the same level of mastery. For example, Student A might have correctly answered all midterm and final exam questions on definitions of disciplinary models and theories but struggled with questions on how these models and theories apply to real-world scenarios, while Student B demonstrated higher mastery of the application skills but confused some definitions and terms that required memorization.
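The Student A/Student B scenario can be made concrete with a small calculation. The sketch below assumes that each exam item is tagged with the SLO it measures (here, hypothetical "definitions" and "application" outcomes worth 50 points each); the data and function names are invented for illustration. The two students' overall scores are identical, but their SLO-level results are not.

```python
from collections import defaultdict

# Each item: (SLO it measures, points earned, points possible).
# Tagging items by SLO is an assumption made for this illustration.
Item = tuple[str, float, float]


def overall_percent(items: list[Item]) -> float:
    """What a points-based final score sees: one number for everything."""
    earned = sum(e for _, e, _ in items)
    possible = sum(p for _, _, p in items)
    return 100 * earned / possible


def slo_percents(items: list[Item]) -> dict[str, float]:
    """Percentage earned separately for each SLO."""
    earned: dict[str, float] = defaultdict(float)
    possible: dict[str, float] = defaultdict(float)
    for slo, e, p in items:
        earned[slo] += e
        possible[slo] += p
    return {slo: 100 * earned[slo] / possible[slo] for slo in possible}


# Hypothetical exam results for two students.
student_a = [("definitions", 50, 50), ("application", 30, 50)]
student_b = [("definitions", 30, 50), ("application", 50, 50)]

print(overall_percent(student_a), overall_percent(student_b))  # 80.0 80.0
print(slo_percents(student_a))  # {'definitions': 100.0, 'application': 60.0}
print(slo_percents(student_b))  # {'definitions': 60.0, 'application': 100.0}
```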

How do I assess and report SLOs from small educational programs?

When evidence of learning comes from small groups of students, it can be difficult to draw definitive conclusions. In a group of only 5-10 students, one or two students who happen to demonstrate unusually high or low levels of learning can skew the results for the entire group. In these cases, the Office of IPA recommends combining SLO data from successive cohorts of students.

For the purposes of IE reporting, the minimum number of students whose SLO results are aggregated should be 10 for undergraduate programs and 5 for graduate programs. When there are fewer than 10 or 5 students, respectively, in a given reporting period, educational programs should wait until the end of the following year(s) and combine the data. If fewer than 10 undergraduate or 5 graduate students have participated in assessment after data have been aggregated over a 3-year period, programs should report using all available outcomes data, even if the sample is below the desired headcount.
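The aggregation rule above can be expressed as a short decision function. This sketch assumes each pending (not yet reported) year is represented as a set of student identifiers, so that a student assessed in more than one year is counted only once; applying the "unique students" count across years is an assumption, and the function name and return labels are hypothetical.

```python
# Minimum headcounts from the guidance above.
MIN_STUDENTS = {"undergraduate": 10, "graduate": 5}
MAX_YEARS_TO_AGGREGATE = 3


def reporting_decision(level: str, pending_years: list[set[str]]) -> str:
    """Decide whether to report SLO results now.

    pending_years: one set of student IDs per academic year whose data have
    not yet been reported, oldest first (hypothetical representation).
    Returns 'report', 'report_below_minimum', or 'defer'.
    """
    # Count unique students across all pending years.
    unique_students = set().union(*pending_years)
    if len(unique_students) >= MIN_STUDENTS[level]:
        return "report"
    if len(pending_years) >= MAX_YEARS_TO_AGGREGATE:
        # After 3 years of aggregation, report whatever data are available.
        return "report_below_minimum"
    return "defer"


print(reporting_decision("undergraduate", [{"s1", "s2", "s3"}]))  # defer
```

A "defer" result corresponds to the sample statements below: collect and securely store the year's data, then revisit the decision after the next year.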

While waiting for a sufficient amount of evidence to accumulate, programs should collect learning outcomes data every year and securely store it. When completing mandatory annual reporting of SLO results in the IE Portal, programs are advised to submit statements along the lines of those below:

Results Statement field: There were 3 students who participated in assessment of this SLO in the 2022-2023 academic year.

Analysis of Results: Due to student privacy and sampling considerations, students’ results for this program are not reported or analyzed.

Improvement Action(s): Because there were fewer than 10 unique students in this academic year who participated in this SLO assessment, we will wait until the end of the 2023-2024 academic year and combine the data to report results, provide an analysis, and formulate improvement actions. Hopefully, by then, there will be a sufficient number of students to include.

How do I conduct and report assessment for educational programs offered in multiple locations and/or modes of delivery?

Educational programs that are offered on multiple campuses (Tallahassee, Florida; Panama City, Florida; Sarasota, Florida; Panama City, Republic of Panama) and/or in multiple modes of delivery (face-to-face and distance learning/online) are expected to have the same SLOs, but a separate set of annually reported results for each active location/modality in which the program is delivered. This expectation comports with SACSCOC’s requirement that an “institution does have an obligation to establish comparability of instruction across locations and modes.” (SACSCOC Resource Manual, p. 182)

For example, if students enrolled in the face-to-face version of the program at the Tallahassee campus demonstrated levels of learning substantially lower than those of students enrolled in the same degree program delivered online, the sources of this discrepancy should be analyzed and addressed. Perhaps there is an issue that is only present in the face-to-face version of the program; in this case, the Improvement Action(s) plan for the face-to-face modality should explain how this issue will be corrected for that specific mode of delivery only, not for both.

Most of the time, levels of student learning will be comparable across the different locations/modalities. In these cases, when completing assessment reports, the narratives for the Analysis of Results and Improvement Action(s) may be similar or identical. Please follow the instructions in the IE Portal User Guide when reporting on outcomes of students ‘belonging’ to different program locations and modes.

Importantly, location/modality determination is based on the academic plan assigned to the student in the Registrar’s database, not on the location or modality of the course in which SLO assessment takes place. Many students enrolled in the face-to-face programs take a few online courses throughout their academic careers, but this does not mean that they belong to an online program. The Office of IPA is available to consult and provide assistance in disaggregating SLO data by program location/modality.
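A minimal sketch of that disaggregation, assuming SLO scores can be linked by student ID to the campus and modality of each student's assigned academic plan. The `plan_lookup` dictionary stands in for Registrar data; all IDs, field names, and the target score of 70 are hypothetical. Student `s1` took an online course but is counted with the face-to-face program, because grouping follows the academic plan rather than the course.

```python
from collections import defaultdict

# Hypothetical stand-in for Registrar data: student ID -> (campus, modality)
# of the student's assigned academic plan.
plan_lookup = {
    "s1": ("Tallahassee", "face-to-face"),
    "s2": ("Tallahassee", "face-to-face"),
    "s3": ("Tallahassee", "online"),
}

# Hypothetical SLO scores; course_modality is recorded but NOT used for grouping.
scores = [
    {"student": "s1", "course_modality": "online", "score": 85},
    {"student": "s2", "course_modality": "face-to-face", "score": 65},
    {"student": "s3", "course_modality": "online", "score": 90},
]


def proportion_meeting_target(scores, plan_lookup, target):
    """Proportion of students at or above target, per plan location/modality."""
    met = defaultdict(int)
    total = defaultdict(int)
    for rec in scores:
        group = plan_lookup[rec["student"]]  # group by academic plan, not course
        total[group] += 1
        if rec["score"] >= target:
            met[group] += 1
    return {group: met[group] / total[group] for group in total}


print(proportion_meeting_target(scores, plan_lookup, target=70))
# {('Tallahassee', 'face-to-face'): 0.5, ('Tallahassee', 'online'): 1.0}
```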

Do I need to include summer in outcomes assessment and reporting for educational programs?

The answer to the question of when to gather evidence of student learning depends on the courses and learning experiences chosen for assessment of SLOs.

- If a given SLO is based on student learning data that comes from a course only taught in Fall and Spring, then Summer does not need to be included.

- If data for a given SLO assessment come from a course taught in all three semesters, it is up to the instructors to decide whether they want to include summer terms. Usually, this decision is based on the size of student enrollment. If comparatively few students are enrolled in Summer A, B, and/or C courses, these terms may be omitted. In this case, the rationale for exclusion should be clearly stated in the ‘Description of Assessment Plan’ field in the IE Portal. Sample statement: “This SLO is assessed based on student learning data obtained in course X. Although this course is taught throughout the academic year, over 90% of students take it in Fall and Spring. To expedite the data collection process without losing a significant amount of student score data, we decided not to include SLO data from the summer terms.”

- If there is sizable student enrollment in the summer course(s) used in SLO assessment and/or if program faculty prefer to include all student learning data in outcomes analysis, instructors need to agree on whether the leading or the trailing Summer is included in the definition of the academic year (Summer, Fall, Spring vs. Fall, Spring, Summer). IPA recommends basing this choice on the timeline for SLO data aggregation and analysis: educational programs that study learning outcomes data in May should use the ‘Summer, Fall, Spring’ approach, and educational programs that collect and analyze SLO data in August/September should define their academic year for assessment purposes as ‘Fall, Spring, Summer’.
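To make the two Summer conventions concrete, the sketch below assigns a term to an assessment-year label. It assumes three terms named "Spring", "Summer", and "Fall", treats all Summer sessions (A, B, and C) alike, and does not cover the split Summer C / Summer A & B cycle listed among the common timeframes; the function name and labels are hypothetical.

```python
def assessment_year(term: str, calendar_year: int, summer_convention: str) -> str:
    """Return the assessment-year label (e.g. '2023-2024') for a term.

    summer_convention:
      'leading'  - academic year defined as Summer, Fall, Spring
      'trailing' - academic year defined as Fall, Spring, Summer
    """
    if summer_convention not in ("leading", "trailing"):
        raise ValueError(f"unknown convention: {summer_convention!r}")
    if term == "Fall":
        start = calendar_year
    elif term == "Spring":
        start = calendar_year - 1
    elif term == "Summer":  # assumption: Summer A, B, and C treated alike
        start = calendar_year if summer_convention == "leading" else calendar_year - 1
    else:
        raise ValueError(f"unknown term: {term!r}")
    return f"{start}-{start + 1}"


# Summer 2024 belongs to different assessment years under the two conventions.
print(assessment_year("Summer", 2024, "leading"))   # 2024-2025
print(assessment_year("Summer", 2024, "trailing"))  # 2023-2024
```

Under the leading convention, Summer 2024 data are grouped with Fall 2024 and Spring 2025, which suits programs that analyze data in May; under the trailing convention, they join Fall 2023 and Spring 2024, which suits an August/September analysis.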

What are examples of good Program Outcomes for educational programs?

In addition to Student Learning Outcomes, each educational program at FSU is required to develop, track, and improve Program Outcomes. As opposed to Student Learning Outcomes, which focus on the knowledge and skills that students should learn, Program Outcomes are non-curricular goals of the academic unit. When choosing new Program Outcomes and/or improving their assessment processes, please consider selecting one of the recommended options in our Recommended Program Outcomes Guide. You may use a recommended Program Outcome plan as is or adjust any part of it to meet your program’s specific needs.

How do I access data for Program Outcomes assessment?

In many cases, utilizing the Institutional Research data dashboards linked in the Recommended Program Outcomes Quick Guide will be sufficient for assessment and reporting.

The Oracle Business Intelligence (OBI) data warehouse provides another venue for accessing centralized data. There are several OBI dashboards created for internal users that offer frequently requested aggregated and student-level data, among which are the Graduation Analysis dashboard and the Student Academic Plan Summary dashboard. To request access to them, follow the step-by-step instructions in Requesting OBI Role Access. After your OBI access is granted, please follow the instructions below to navigate the dashboards and extract needed data:

The Office of Institutional Research also offers classes for users interested in creating customized data queries in OBI. For articles on getting started, dashboards, and subject areas available for creating custom queries, visit the myFSU BI Knowledge Base.